The Role of Stochastic Processes in Data Science Innovation

Author: Victor Oketch Sabare (@sabare12)

Introduction

In data science, the ability to model and predict outcomes in environments riddled with uncertainty is paramount. At the heart of this challenge lies the mathematical concept of a stochastic process: a family of random variables that captures the probabilistic dynamics of a system over time. Stochastic processes are the mathematical tools that allow data scientists to harness and make sense of randomness, providing a framework for understanding complex, unpredictable phenomena.

A stochastic process is formally defined as a collection of random variables indexed by time or space, representing the evolution of some system of random values over time. These processes are crucial in data science for modeling sequences of events where exact predictions are impossible due to the inherent randomness in the underlying processes. They enable the quantification of uncertainty and the construction of models that can learn from and adapt to the stochastic nature of real-world data.

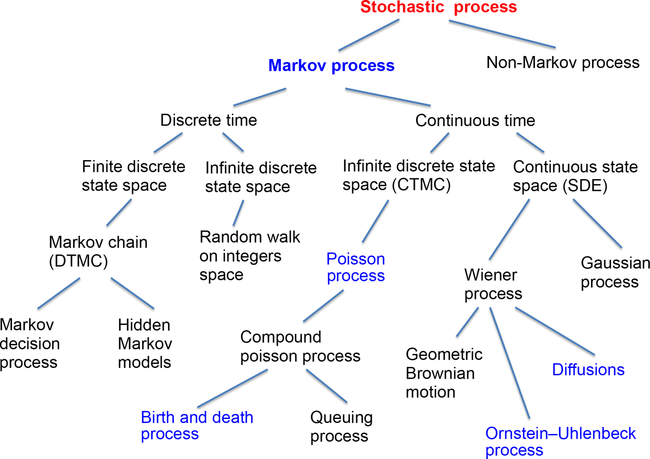

There are several types of stochastic processes, each with its unique properties and applications:

Markov Chains: These are processes that exhibit the "memoryless" property, where the future state depends only on the current state and not on the sequence of events that preceded it. Markov chains are widely used in queueing theory, stock price analysis, and Markov Chain Monte Carlo (MCMC) methods in Bayesian data analysis.

Poisson Processes: These are counting processes that model the occurrence of events over time. Events arrive at a constant average rate, the numbers of events in non-overlapping time intervals are independent, and the waiting times between events are exponentially distributed. Poisson processes are often used in fields such as telecommunications, insurance, and finance to model random events like incoming calls, insurance claims, or financial transactions.

Brownian Motion: Also known as the Wiener process, this continuous-time stochastic process has independent, normally distributed increments and models the random motion of particles suspended in a fluid, a phenomenon first observed by botanist Robert Brown. In data science, Brownian motion is foundational to the theory of stochastic calculus and is used in various financial models, including the famous Black-Scholes option pricing model.
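
To make the three process types above more concrete, here is a minimal simulation sketch using only Python's standard library. The function names, the two-state weather chain, and the parameter values are illustrative assumptions, not part of any particular library.

```python
import math
import random


def simulate_markov_chain(transition, start, n_steps, rng):
    """Walk a finite Markov chain; transition[s] maps next states to probabilities."""
    path = [start]
    for _ in range(n_steps):
        options = transition[path[-1]]
        states = list(options)
        weights = [options[s] for s in states]
        # The next state is drawn using only the current state (memoryless property).
        path.append(rng.choices(states, weights=weights)[0])
    return path


def simulate_poisson_arrivals(rate, horizon, rng):
    """Arrival times of a homogeneous Poisson process on [0, horizon]."""
    times = []
    t = rng.expovariate(rate)
    while t <= horizon:
        times.append(t)
        # Gaps between consecutive events are independent Exponential(rate) draws.
        t += rng.expovariate(rate)
    return times


def simulate_brownian_motion(horizon, n_steps, rng):
    """Standard Brownian motion sampled on an even time grid, starting at 0."""
    dt = horizon / n_steps
    path = [0.0]
    for _ in range(n_steps):
        # Each increment is an independent Normal(0, dt) draw.
        path.append(path[-1] + rng.gauss(0.0, math.sqrt(dt)))
    return path


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical two-state weather chain, used only for illustration.
    weather = {
        "sunny": {"sunny": 0.8, "rainy": 0.2},
        "rainy": {"sunny": 0.4, "rainy": 0.6},
    }
    print(simulate_markov_chain(weather, "sunny", 10, rng))
    print(len(simulate_poisson_arrivals(3.0, 10.0, rng)), "arrivals")
    print(simulate_brownian_motion(1.0, 5, rng))
```

Passing an explicit `random.Random` instance keeps each run reproducible, which is usually worth the extra argument when comparing simulated paths.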

The objectives of this article are to elucidate the advanced applications of stochastic processes in data science, to provide a comprehensive understanding of how these mathematical constructs are employed to solve real-world problems, and to discuss the implications of stochastic modeling in future technological advancements. By examining the significance of stochastic processes in practical data analysis, we aim to highlight their indispensable role in making informed decisions in the face of uncertainty and in developing robust predictive models.

As we venture into the depths of stochastic processes and their applications, we will uncover the profound impact they have on the methodologies and outcomes of data science projects. From optimizing algorithms to forecasting unpredictable market trends, stochastic processes are the unsung heroes in the data scientist's toolkit, enabling us to navigate through the randomness and extract meaningful insights from the chaos.

Stochastic Processes in Machine Learning

Stochastic processes play a pivotal role in the development and optimization of machine learning algorithms. Their inherent randomness is not a limitation but a feature that enables algorithms to navigate complex, nonlinear solution spaces effectively. This section explores the utilization of stochastic processes in machine learning, focusing on stochastic gradient descent and Markov Decision Processes (MDPs) in reinforcement learning.

Stochastic Gradient Descent

Stochastic Gradient Descent (SGD) is a cornerstone optimization method in machine learning, particularly in the training of deep neural networks. Unlike traditional gradient descent, which uses the entire dataset to compute the gradient of the loss function, SGD updates the model's parameters using only a randomly selected subset of data for each iteration. This stochastic nature helps in several ways:

- Efficiency: SGD significantly reduces the computational burden, making it feasible to train on large datasets.

- Avoiding Local Minima: The noise in SGD's gradient estimates can help the optimizer escape saddle points and shallow local minima, a common issue in high-dimensional, non-convex optimization problems[1]. This is a tendency rather than a guarantee.

- Generalization: By using different subsets of data for each iteration, SGD can help prevent overfitting, leading to models that generalize better to unseen data.
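
The update rule described above can be sketched in a few lines. This is a minimal mini-batch SGD for one-dimensional linear regression, assuming a mean squared error loss. The toy data generator, learning rate, and batch size are hypothetical choices made for illustration.

```python
import random


def sgd_linear_regression(xs, ys, lr=0.05, batch_size=8, epochs=200, seed=0):
    """Fit y ~ w * x + b by mini-batch SGD on the mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # draw fresh random mini-batches every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            grad_w = 0.0
            grad_b = 0.0
            # The gradient is estimated from the mini-batch, not the full dataset.
            for i in batch:
                error = (w * xs[i] + b) - ys[i]
                grad_w += 2.0 * error * xs[i] / len(batch)
                grad_b += 2.0 * error / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


def make_toy_data(n=200, seed=1):
    """Hypothetical data: y = 3x - 1 plus a little Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [3.0 * x - 1.0 + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys


if __name__ == "__main__":
    xs, ys = make_toy_data()
    print(sgd_linear_regression(xs, ys))
```

Each parameter update touches only `batch_size` examples, which is where the efficiency gain over full-batch gradient descent comes from.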

Markov Decision Processes in Reinforcement Learning

Markov Decision Processes (MDPs) provide a mathematical framework for modeling decision-making in situations where outcomes are partly random and partly under the control of a decision-maker. MDPs are central to reinforcement learning (RL), a type of machine learning where an agent learns to make decisions by performing actions and receiving feedback in the form of rewards or penalties.

- Decision Making Under Uncertainty: MDPs model the environment in RL as a stochastic process, where the next state and the reward depend probabilistically on the current state and the action taken by the agent.

- Policy Optimization: In RL, the goal is to find a policy (a mapping from states to actions) that maximizes some notion of cumulative reward. MDPs provide the theoretical underpinning for algorithms that seek to optimize such policies in stochastic environments.
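
One classical way to compute an optimal policy when the MDP is small and fully known is value iteration. The sketch below assumes a tabular MDP stored in a dictionary. The two-state `TOY_MDP` and its rewards are made up for illustration, and reinforcement learning algorithms typically have to estimate these quantities from experience instead.

```python
def q_value(transitions, values, state, action, gamma):
    """Expected return of taking `action` in `state`, then following `values`."""
    return sum(
        prob * (reward + gamma * values[next_state])
        for prob, next_state, reward in transitions[(state, action)]
    )


def value_iteration(states, actions, transitions, gamma=0.9, tol=1e-8):
    """transitions[(s, a)] is a list of (probability, next_state, reward) tuples."""
    values = {s: 0.0 for s in states}
    while True:
        delta = 0.0
        for s in states:
            # Bellman optimality backup: keep the value of the best action.
            best = max(q_value(transitions, values, s, a, gamma) for a in actions)
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    # The greedy policy picks the action with the highest expected return.
    policy = {
        s: max(actions, key=lambda a: q_value(transitions, values, s, a, gamma))
        for s in states
    }
    return values, policy


# Hypothetical two-state MDP used only for illustration.
TOY_STATES = ["low", "high"]
TOY_ACTIONS = ["wait", "work"]
TOY_MDP = {
    ("low", "wait"): [(1.0, "low", 0.0)],
    ("low", "work"): [(0.7, "high", -1.0), (0.3, "low", -1.0)],
    ("high", "wait"): [(1.0, "high", 2.0)],
    ("high", "work"): [(0.5, "high", 3.0), (0.5, "low", 3.0)],
}

if __name__ == "__main__":
    print(value_iteration(TOY_STATES, TOY_ACTIONS, TOY_MDP))
```

In this toy problem the agent pays a cost to move from "low" to "high" and then collects a steady reward, so the greedy policy is to work in "low" and wait in "high".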

Applications and Implications

The application of stochastic processes in machine learning extends beyond SGD and MDPs. From stochastic gradient boosting, which builds an ensemble of decision trees on randomly selected subsets of the training data, to generative models that simulate complex data distributions, stochastic processes are integral to a wide range of machine learning techniques.

The stochastic nature of these processes and algorithms mirrors the uncertainty inherent in real-world data and environments. By embracing randomness, machine learning models can achieve robustness, adaptability, and scalability. This makes stochastic processes not just a mathematical curiosity but a practical necessity for advancing machine learning and data science.

Stochastic processes are indispensable in the realm of machine learning, providing the tools and frameworks necessary for dealing with uncertainty and complexity. As machine learning continues to evolve, the role of stochastic processes is likely to grow, driving innovation and enabling new applications across diverse domains.

Financial Data Analysis

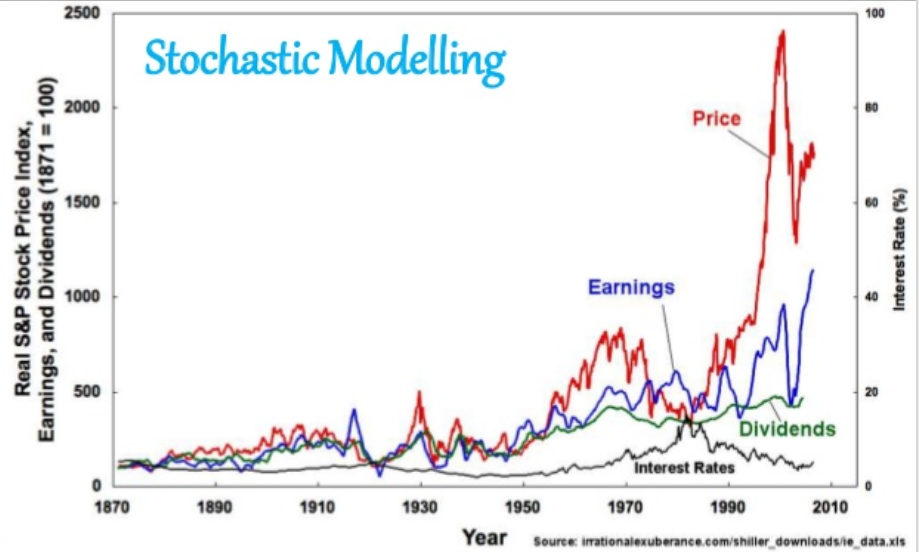

The application of stochastic processes in financial data analysis is a testament to the intricate relationship between mathematical models and economic realities. These processes are essential for capturing the inherent uncertainty and dynamic behavior of financial markets.

Stochastic Processes in Modeling Financial Markets

Financial markets are characterized by their unpredictability, with asset prices and market movements influenced by a myriad of factors ranging from macroeconomic shifts to investor sentiment. Stochastic processes, such as geometric Brownian motion (GBM), have been widely adopted to model this randomness in stock prices and other financial instruments. GBM, in particular, is favored for its simplicity and tractability: it assumes constant drift and volatility, keeps prices strictly positive, and implies normally distributed log returns, capturing the continuous evolution of prices and the unpredictable nature of their changes over time.
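
As a rough illustration, a GBM path can be sampled with the exact log-normal update rather than a discretized approximation. The function name and the parameter values below (initial price 100, drift 5%, volatility 20%) are assumptions chosen for the example.

```python
import math
import random


def simulate_gbm(s0, mu, sigma, horizon, n_steps, rng):
    """Sample one geometric Brownian motion path on an even time grid."""
    dt = horizon / n_steps
    path = [s0]
    for _ in range(n_steps):
        z = rng.gauss(0.0, 1.0)
        # Exact step: S(t + dt) = S(t) * exp((mu - sigma^2 / 2) * dt + sigma * sqrt(dt) * z)
        growth = math.exp((mu - 0.5 * sigma ** 2) * dt + sigma * math.sqrt(dt) * z)
        path.append(path[-1] * growth)
    return path


if __name__ == "__main__":
    rng = random.Random(7)
    daily_path = simulate_gbm(100.0, 0.05, 0.2, 1.0, 252, rng)
    print(daily_path[-1])
```

Because each step multiplies the previous price by a positive factor, simulated prices can never go negative, which is one reason GBM is preferred over plain Brownian motion for prices.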

Stochastic Differential Equations in Option Pricing

Stochastic differential equations (SDEs) are a cornerstone of financial modeling, particularly in the realm of derivative pricing. The Black-Scholes model, one of the most celebrated achievements in financial economics, employs an SDE to price European options. This model assumes that the underlying asset follows a GBM, leading to a partial differential equation that can be solved to find the option's price. The Black-Scholes formula revolutionized financial markets by providing a theoretical framework for pricing options from five inputs: the underlying asset's current price and volatility, the strike price, the risk-free interest rate, and the time to expiration.
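
For reference, the closed-form Black-Scholes prices for European options on a non-dividend-paying asset can be written in a few lines. This is a sketch with hypothetical function and parameter names. It assumes constant volatility and a constant risk-free rate, as the model itself does.

```python
import math


def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def black_scholes_call(spot, strike, rate, sigma, maturity):
    """Price of a European call under the Black-Scholes assumptions."""
    d1 = (math.log(spot / strike) + (rate + 0.5 * sigma ** 2) * maturity) / (
        sigma * math.sqrt(maturity)
    )
    d2 = d1 - sigma * math.sqrt(maturity)
    return spot * normal_cdf(d1) - strike * math.exp(-rate * maturity) * normal_cdf(d2)


def black_scholes_put(spot, strike, rate, sigma, maturity):
    """Price of a European put, obtained from the call via put-call parity."""
    call = black_scholes_call(spot, strike, rate, sigma, maturity)
    return call - spot + strike * math.exp(-rate * maturity)


if __name__ == "__main__":
    print(black_scholes_call(100.0, 100.0, 0.05, 0.2, 1.0))
    print(black_scholes_put(100.0, 100.0, 0.05, 0.2, 1.0))
```

The put price is derived from put-call parity instead of a second formula, which keeps the two functions consistent by construction.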

Monte Carlo Simulations in Risk Assessment and Portfolio Optimization

Monte Carlo simulations are a powerful stochastic technique used to assess risk and optimize portfolios. By simulating thousands of possible market scenarios, these simulations can provide a probabilistic distribution of potential outcomes for an investment portfolio. This allows financial analysts to quantify the risk and estimate the likelihood of various returns, which is invaluable for making informed investment decisions and for strategic asset allocation. Monte Carlo simulations are particularly useful for complex financial instruments where analytical solutions are unavailable or difficult to derive.
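
A stripped-down version of this idea is sketched below: simulate many one-period portfolio returns and read a risk measure off the simulated distribution. The example deliberately assumes independent, normally distributed asset returns, which real portfolios generally violate. The weights, means, and volatilities are hypothetical.

```python
import random


def simulate_portfolio_returns(weights, means, vols, n_scenarios, rng):
    """One-period portfolio returns, assuming independent normal asset returns."""
    returns = []
    for _ in range(n_scenarios):
        scenario = sum(w * rng.gauss(m, v) for w, m, v in zip(weights, means, vols))
        returns.append(scenario)
    return returns


def value_at_risk(returns, level=0.95):
    """Loss that is exceeded in roughly (1 - level) of the simulated scenarios."""
    ordered = sorted(returns)
    index = int((1.0 - level) * len(ordered))
    return -ordered[index]


if __name__ == "__main__":
    rng = random.Random(123)
    # Hypothetical 60/40 portfolio of a volatile and a stable asset.
    simulated = simulate_portfolio_returns([0.6, 0.4], [0.08, 0.03], [0.2, 0.05], 20000, rng)
    print("mean return:", sum(simulated) / len(simulated))
    print("95% VaR:", value_at_risk(simulated))
```

A more realistic version would draw correlated returns, for example from a multivariate distribution fitted to historical data, but the structure of the simulation stays the same.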

Stochastic processes, SDEs, and Monte Carlo simulations are integral to financial data analysis, providing the tools necessary to model the uncertainty and complexity of financial markets. These methods enable analysts to forecast future price movements, value derivatives, assess risk, and optimize portfolios, thereby playing a crucial role in the financial industry's decision-making processes.

Predictive Analytics and Uncertainty Quantification

The inherent unpredictability of numerous systems, from natural phenomena to human behavior, poses significant challenges and opportunities for data science. Stochastic modeling, with its foundation in probabilistic rules and randomness, offers a powerful approach to understanding and navigating the uncertainty that characterizes these systems. This section highlights the critical role of stochastic processes in modeling systems where noise and uncertainty are not just present but are fundamental characteristics.

The Importance of Stochastic Modeling

Stochastic models are essential for systems where deterministic approaches fall short. Unlike deterministic models, which predict a single outcome based on a set of initial conditions, stochastic models acknowledge the inherent randomness in systems and provide a range of possible outcomes, each with its probability. This probabilistic approach is crucial for making informed decisions under uncertainty, allowing for the assessment of risks and the exploration of different scenarios.

Case Studies

Climate Modeling: Climate systems are inherently complex and chaotic, with countless variables interacting in unpredictable ways. Stochastic processes have been instrumental in improving climate models, particularly in predicting extreme weather events and understanding climate variability. By incorporating randomness into models, scientists can better capture the uncertainty in future climate conditions, leading to more robust predictions and strategies for mitigation and adaptation.

Traffic Flow Analysis: The dynamics of traffic flow are influenced by numerous random factors, including driver behavior, road conditions, and vehicle interactions. Stochastic models, such as the Nagel-Schreckenberg cellular automaton model for traffic flow, use probabilistic rules (for example, a random chance that a driver slows down) to simulate the randomness in traffic patterns. These models have been crucial in optimizing traffic management systems, reducing congestion, and improving road safety.

Epidemiology: The spread of infectious diseases is a stochastic process, influenced by random interactions between individuals and the probabilistic nature of disease transmission. Stochastic models have been pivotal in epidemiology, especially in the context of the COVID-19 pandemic, for predicting the spread of the virus, assessing the impact of public health interventions, and planning resource allocation. These models provide insights into the potential trajectories of an outbreak and the effectiveness of containment strategies.
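
To show what a stochastic epidemic model can look like in practice, here is a sketch of an SIR (susceptible, infected, recovered) model simulated with the Gillespie algorithm. It is one common choice, not the specific model used in any study mentioned here. The parameter values in the demo are illustrative assumptions.

```python
import random


def gillespie_sir(s, i, r, beta, gamma, t_max, rng):
    """Event-by-event stochastic simulation of an SIR epidemic in a closed population."""
    n = s + i + r
    t = 0.0
    history = [(t, s, i, r)]
    while i > 0:
        infection_rate = beta * s * i / n
        recovery_rate = gamma * i
        total_rate = infection_rate + recovery_rate
        # The waiting time until the next event is exponentially distributed.
        t += rng.expovariate(total_rate)
        if t > t_max:
            break
        # Choose which event happened, in proportion to its rate.
        if rng.random() < infection_rate / total_rate:
            s, i = s - 1, i + 1
        else:
            i, r = i - 1, r + 1
        history.append((t, s, i, r))
    return history


if __name__ == "__main__":
    rng = random.Random(2020)
    outcome = gillespie_sir(990, 10, 0, beta=0.3, gamma=0.1, t_max=200.0, rng=rng)
    print("final state:", outcome[-1])
```

Running the simulation many times with different seeds gives a distribution of outbreak sizes and durations, which is the kind of output a deterministic SIR model cannot provide.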

Embracing Uncertainty

The case studies above underscore the transformative impact of stochastic processes in understanding and managing complex systems. By embracing uncertainty, stochastic modeling enables the exploration of a wide range of possibilities, providing a more nuanced understanding of systems that are inherently unpredictable. This approach is not about eliminating uncertainty but about quantifying it and incorporating it into decision-making processes.

Stochastic processes are indispensable in the realm of data science, especially in fields where uncertainty is a defining feature. The ability to model randomness and make probabilistic predictions is crucial for navigating the complexities of the natural and social worlds. As data science continues to evolve, the application of stochastic processes will undoubtedly expand, offering new insights and solutions to some of the most challenging problems.

Computational Considerations

Working with stochastic processes presents unique computational challenges that stem from their inherent randomness and the complexity of the systems they model. This section discusses these challenges, the algorithms and software packages that facilitate the simulation and analysis of stochastic models, and the role of high-performance computing in managing large-scale stochastic simulations.

Challenges in Stochastic Computations

The computational problems associated with stochastic processes often involve calculating properties of statistical models where one has to deal with the randomness of the system. This can include generating random variables with specific distributions, simulating stochastic processes, or estimating the parameters of these processes from data. The challenges are compounded when dealing with high-dimensional spaces or when the models require the integration over all possible paths a process could take, which is computationally intensive and often infeasible to perform exactly.
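
Generating random variables with a specific distribution is often done by transforming uniform random numbers. A minimal sketch of inverse transform sampling follows, using the exponential distribution because its inverse CDF has a closed form. The function names are hypothetical.

```python
import math
import random


def sample_exponential(rate, rng):
    """Draw from Exponential(rate) by inverting its CDF, F(x) = 1 - exp(-rate * x)."""
    u = rng.random()  # uniform on [0, 1)
    return -math.log(1.0 - u) / rate


def sample_discrete(outcomes, probabilities, rng):
    """Draw from a finite distribution by walking its cumulative probabilities."""
    u = rng.random()
    cumulative = 0.0
    for outcome, prob in zip(outcomes, probabilities):
        cumulative += prob
        if u < cumulative:
            return outcome
    return outcomes[-1]  # guards against floating-point round-off


if __name__ == "__main__":
    rng = random.Random(5)
    print([round(sample_exponential(2.0, rng), 3) for _ in range(5)])
    print([sample_discrete(["a", "b", "c"], [0.2, 0.5, 0.3], rng) for _ in range(5)])
```

When the inverse CDF is not available in closed form, other techniques such as rejection sampling or MCMC are typically used instead.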

State-of-the-Art Algorithms and Software

To address these computational demands, several algorithms and software packages have been developed:

- Monte Carlo Methods: These are used for simulating random variables and stochastic processes, and for estimating integrals. They are particularly useful when dealing with high-dimensional problems where analytical solutions are not available[1].

- Stochastic Approximation Algorithms: These include the aforementioned stochastic gradient descent, which is used for optimizing parameters in machine learning models[1].

- Software Packages: There are numerous software packages designed for stochastic simulation, such as R's suite of packages for probabilistic modeling, Python's NumPy and SciPy for numerical computations, and specialized software like MATLAB's Statistics and Machine Learning Toolbox.
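
As a small illustration of the Monte Carlo idea from the list above, the sketch below estimates an integral over the unit hypercube and reports a standard error alongside the estimate. It uses only the standard library. The function name and the five-dimensional test integrand are assumptions made for the example.

```python
import math
import random


def mc_integrate(f, dim, n_samples, rng):
    """Estimate the integral of f over the unit hypercube [0, 1]^dim."""
    total = 0.0
    total_sq = 0.0
    for _ in range(n_samples):
        point = [rng.random() for _ in range(dim)]
        value = f(point)
        total += value
        total_sq += value * value
    mean = total / n_samples
    variance = max(total_sq / n_samples - mean * mean, 0.0)
    # The standard error shrinks like 1 / sqrt(n_samples), regardless of dim.
    std_error = math.sqrt(variance / n_samples)
    return mean, std_error


if __name__ == "__main__":
    rng = random.Random(11)
    # The integral of x1^2 + ... + x5^2 over [0, 1]^5 is exactly 5/3.
    estimate, error = mc_integrate(lambda x: sum(v * v for v in x), 5, 20000, rng)
    print(estimate, "+/-", error)
```

The error rate not depending on the dimension is what makes Monte Carlo attractive for high-dimensional problems, where grid-based quadrature becomes impractical.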

High-Performance Computing (HPC)

High-performance computing is crucial for handling large-scale stochastic simulations, especially for modeling and simulation workflows whose execution times and resource requirements are themselves unpredictable. Techniques such as speculative scheduling, which adapts the resources allotted to a stochastic application on the fly based on its past execution behavior, can significantly improve system utilization and application response time.

The Importance of HPC

The importance of HPC in stochastic processes cannot be overstated. It enables the efficient execution of complex simulations that would otherwise be intractable. By leveraging the power of HPC, researchers and practitioners can perform more accurate and detailed analyses, leading to better understanding and prediction of stochastic systems.

Computational considerations are a critical aspect of working with stochastic processes. The challenges are met with advanced algorithms and software that enable simulation and analysis, while high-performance computing provides the necessary infrastructure to handle the scale and complexity of these tasks. As stochastic modeling continues to grow in importance across various domains, the reliance on sophisticated computational resources will only increase.

Future Directions

The future of stochastic processes in data science is poised to be shaped by their integration with emerging technologies and the continued need for interdisciplinary research. This section speculates on the potential applications and developments in this field.

Integration with Emerging Technologies

Quantum Computing: The potential integration of stochastic processes with quantum computing could lead to significant advancements in computational capabilities. Quantum algorithms may enable the simulation of complex stochastic systems more efficiently than classical computers, opening new avenues for modeling and problem-solving in areas such as finance, logistics, and materials science.

Deep Learning: Stochastic processes are also likely to play a crucial role in the next generation of deep learning models. By incorporating stochastic elements into neural networks, such as stochastic neurons or layers, deep learning can become more robust to noise and capable of capturing the uncertainty inherent in real-world data.
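
Dropout is one widely used example of a stochastic layer, and it is shown here only to illustrate the idea; the paragraph above does not name a specific technique. The sketch assumes the common "inverted" variant, which rescales the surviving activations during training so that their expected value is unchanged.

```python
import random


def dropout(activations, drop_prob, rng, training=True):
    """Randomly zero activations during training and rescale the survivors."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        # At inference time the layer is deterministic and passes values through.
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


if __name__ == "__main__":
    rng = random.Random(3)
    print(dropout([0.5, 1.0, 1.5, 2.0], 0.5, rng))
    print(dropout([0.5, 1.0, 1.5, 2.0], 0.5, rng, training=False))
```

Keeping the layer stochastic at prediction time and averaging several passes is one way to obtain rough uncertainty estimates, though that goes beyond this sketch.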

Interdisciplinary Research

The advancement of stochastic processes in data science will benefit greatly from interdisciplinary research. Collaboration between mathematicians, computer scientists, engineers, and domain experts can lead to the development of more sophisticated models that are both computationally feasible and highly applicable to real-world problems.

IoT and Sensor Networks: In the realm of the Internet of Things (IoT), optimizing the placement and operation of sensor networks is a problem well-suited for stochastic models. These models can account for the random nature of environmental factors and sensor readings, leading to more efficient and accurate data collection systems.

Quantitative Finance: Stochastic processes will continue to evolve in quantitative finance, not only for modeling price movements but also for developing new financial instruments and risk management strategies. As markets become more complex, the need for advanced stochastic models that can capture this complexity increases.

Healthcare and Epidemiology: In healthcare, stochastic models can help in understanding the spread of diseases, the development of new drugs, and the optimization of healthcare delivery. The COVID-19 pandemic has underscored the importance of stochastic modeling in predicting disease spread and evaluating intervention strategies.

The future of stochastic processes in data science is bright, with numerous opportunities for innovation through the integration with emerging technologies and interdisciplinary research. As data science continues to push the boundaries of what is possible, stochastic processes will remain a key tool for modeling the uncertainty and complexity that characterize many aspects of the world around us.

Conclusion

Throughout this article, we have explored the multifaceted role of stochastic processes in data science, delving into their theoretical foundations, applications in machine learning and financial data analysis, and their significance in systems characterized by inherent uncertainty. We have also discussed the computational considerations essential for working with stochastic models and speculated on the future directions of stochastic processes in data science, highlighting their integration with emerging technologies and the importance of interdisciplinary research.

Stochastic processes, with their ability to model randomness and uncertainty, are indispensable in the data science toolkit. They provide a robust framework for understanding and predicting complex phenomena across various domains, from financial markets and climate modeling to epidemiology and beyond. The application of stochastic processes in machine learning, through techniques like stochastic gradient descent and Markov Decision Processes, underscores their transformative potential in developing algorithms that are efficient, adaptable, and capable of handling the intricacies of real-world data.

The computational challenges associated with stochastic processes underscore the need for advanced algorithms, software packages, and high-performance computing to simulate and analyze complex stochastic models effectively. As we look to the future, the integration of stochastic processes with quantum computing and deep learning promises to unlock new capabilities in data analysis, offering more sophisticated models that can better capture the uncertainty inherent in the data.

It is worth pausing to appreciate the profound impact of stochastic processes in data science. By embracing randomness and uncertainty, data scientists can develop more nuanced, accurate, and predictive models. The journey into the realm of stochastic processes is not just about tackling mathematical complexities; it's about unlocking the potential to understand and shape the world in ways previously unimaginable.

As we continue to advance in the field of data science, let us not shy away from the challenges posed by randomness and uncertainty. Instead, let us harness the power of stochastic processes to drive innovation, enhance decision-making, and uncover deeper insights into the complex systems that define our world.

References

The comprehensive list of academic papers, books, and online resources cited throughout the article is as follows:

Christo Ananth, N. Anbazhagan, Mark Goh. "Stochastic Processes and Their Applications in Artificial Intelligence" (Book). Engineering Science Reference, July 10th, 2023. ISBN: 9781668476802.

Wikipedia. "Stochastic process" (Online Resource). Available at: https://en.wikipedia.org/wiki/Stochastic_process.

"Stochastic Processes and their Applications" (Journal). ScienceDirect. Available at: https://www.sciencedirect.com/journal/stochastic-processes-and-their-applications.

"Statistical Inference for Stochastic Processes" (Journal). Springer. Available at: https://link.springer.com/journal/11203.

İsmail Güzel. "Classification of Stochastic Processes with Topological Data Analysis" (Research Paper). arXiv, June 8, 2022. Available at: https://arxiv.org/abs/2206.03973v1.

These sources have been instrumental in providing a comprehensive understanding of stochastic processes and their applications in various domains, as discussed in the article.

About the Author

Victor Sabare is a data scientist and researcher learning about stochastic processes and their applications in machine learning, finance, and predictive analytics. He is currently pursuing a degree in Data Science and Analytics. Victor is committed to advancing the field of data science through interdisciplinary research and the integration of emerging technologies. He can be reached at his email.